Bayes' Theorem in Decision Making

1 May 2023 - 7 min read

Suppose you collect data every time there is a traffic jam, and you find that it is raining in about half of those jams. You might ask the reverse question: when it rains, what is the chance of a traffic jam? It could be exactly half, more than half, or less than half. To find the answer, you can use Bayes' theorem.

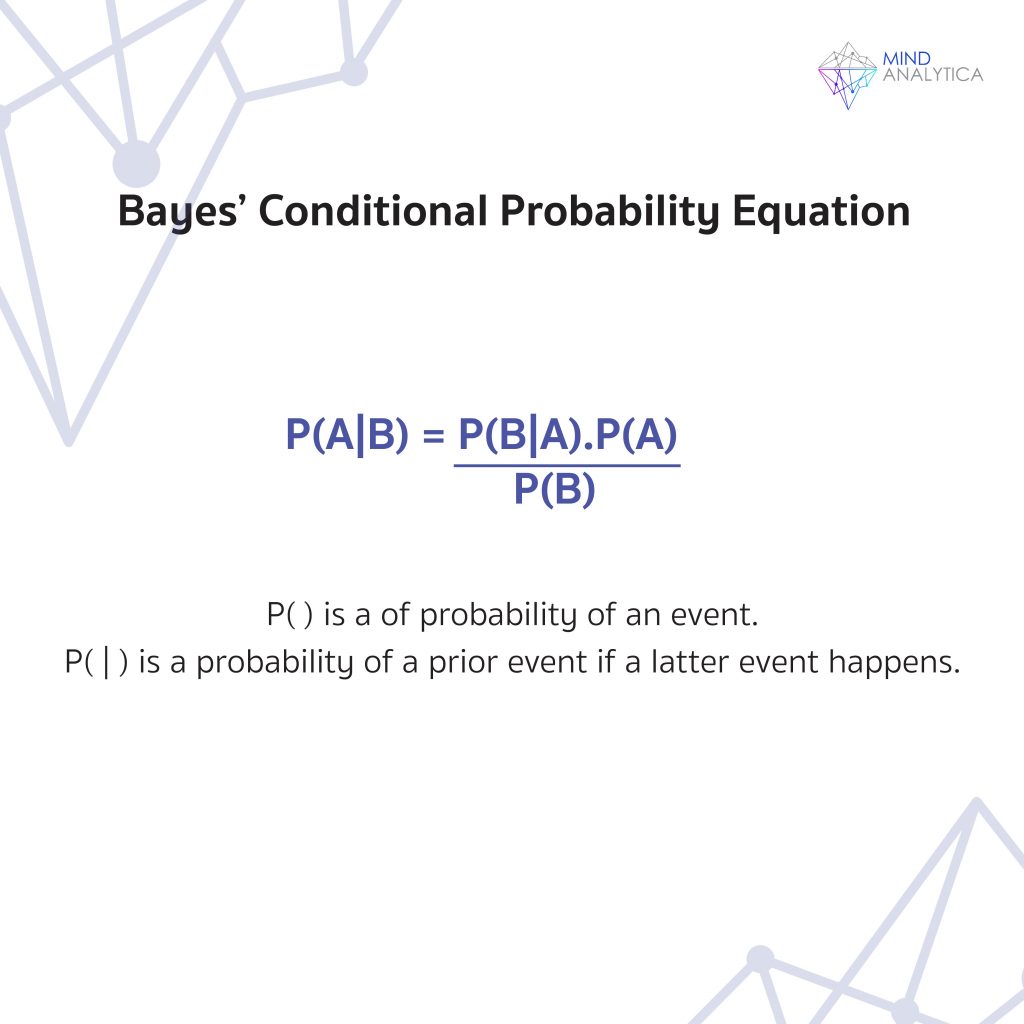

Let's write it as a mathematical equation. I'll minimize the mathematical notation as much as possible.

Let p(A) represent the probability of event A occurring,

and let p(A | B) represent the probability of the event before the bar (A) occurring given that the event after the bar (B) has occurred.

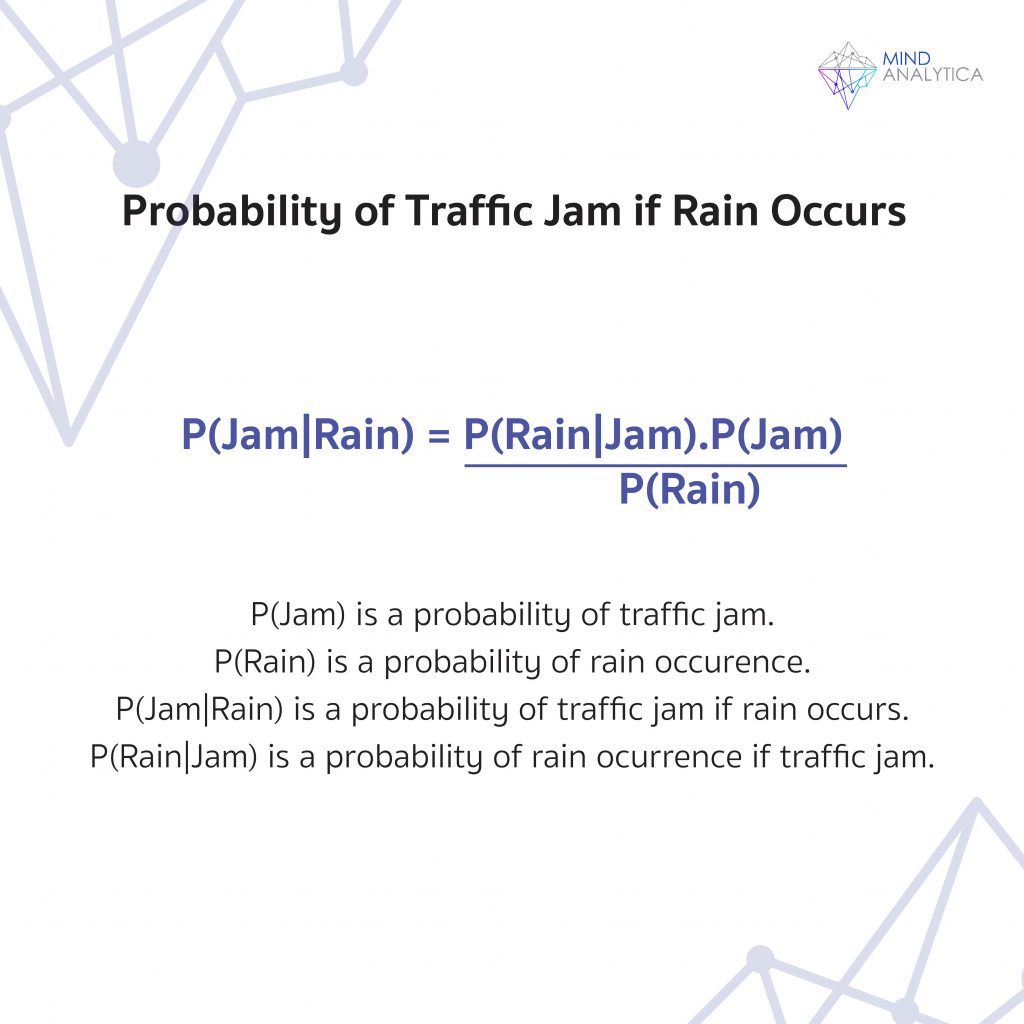

For example,

"p(traffic jam) is the probability of a traffic jam occurring,"

and "p(rain | traffic jam) is the probability of rain if there is a traffic jam." From the first paragraph, p(rain | traffic jam) equals 50%.

According to the first paragraph, we want to find "p(traffic jam | rain), which is the probability of a traffic jam on a rainy day." Thomas Bayes proposed the following method for calculating the reverse conditional probability:

p(traffic jam | rain) = p(rain | traffic jam) p(traffic jam) / p(rain)

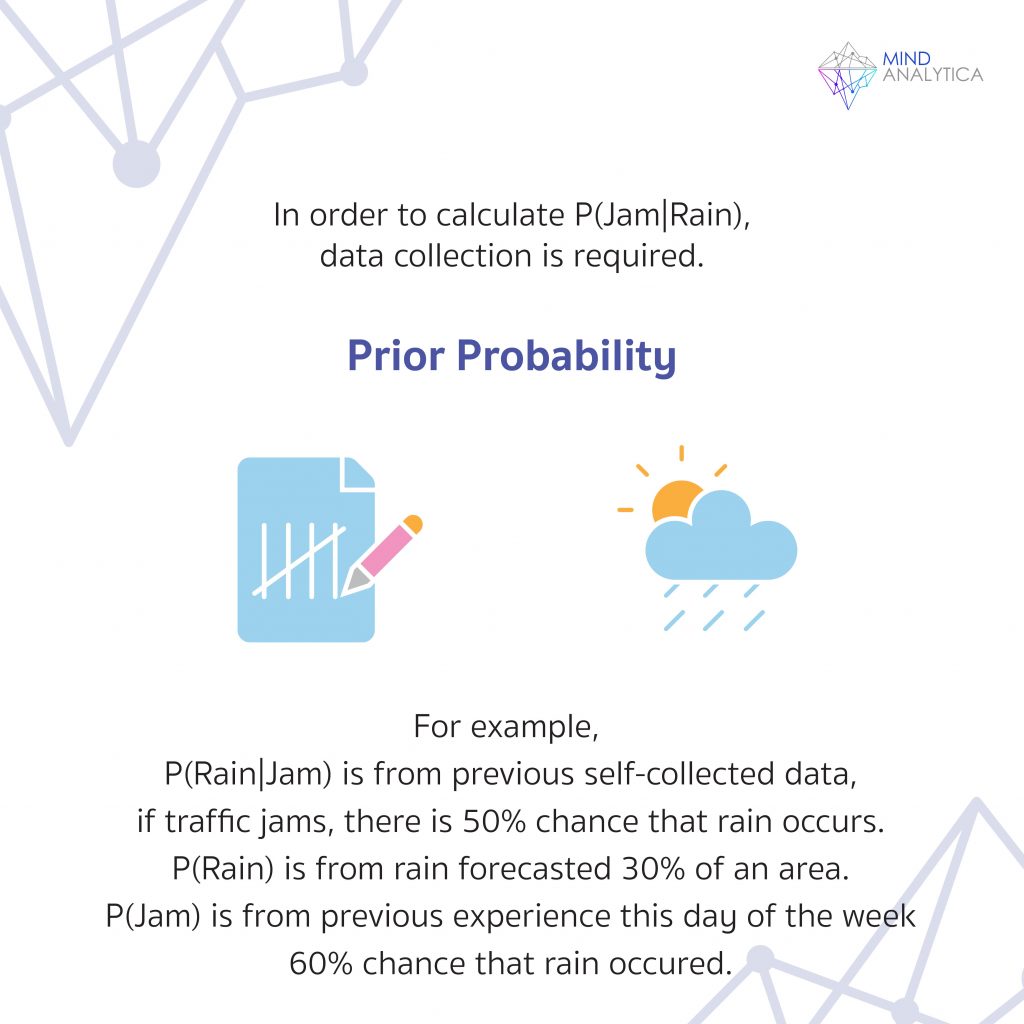

We know from the first paragraph that p(rain | traffic jam) is 50%.

As for p(rain), we can look at the weather forecast to determine the percentage of rain in the area; let's say it's 30%.

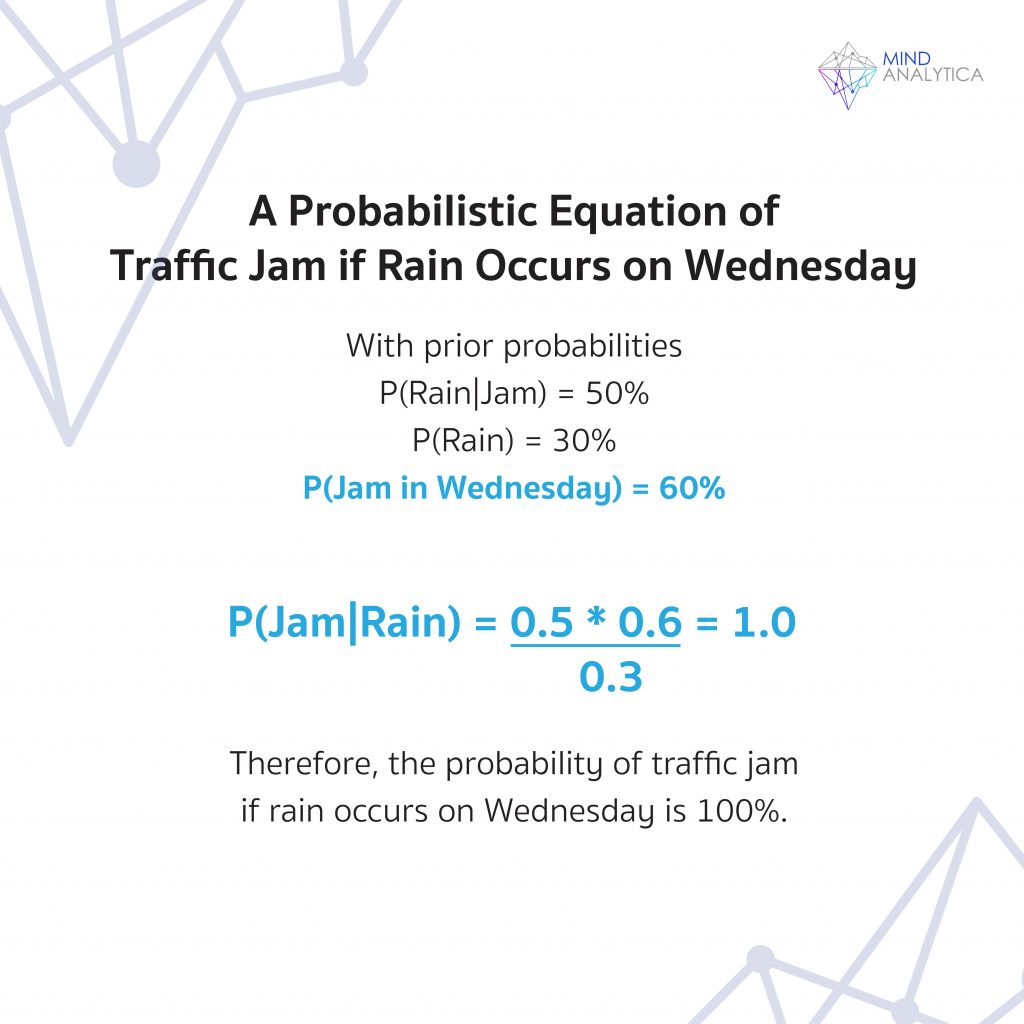

As for p(traffic jam), this is a number that a person estimates from their own experience. Mathematicians call it the prior probability. Suppose today is a Friday evening, and we estimate the chance of encountering a traffic jam (regardless of whether it rains) at 60%. We leave the house and find that it's raining. The probability of a traffic jam, given the additional information that it's raining, is as follows:

p(traffic jam | rain) = 0.5 * 0.6 / 0.3 = 1.0

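If we don't know whether it will rain, the chance of encountering a traffic jam is 60%. But if we already know that it's raining, the chance of facing a traffic jam is 100% - there will definitely be a traffic jam! This extreme result is a consequence of the assumed numbers: 0.5 * 0.6 = 0.3 is exactly p(rain), so under these estimates every rainy Friday evening coincides with a jam.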

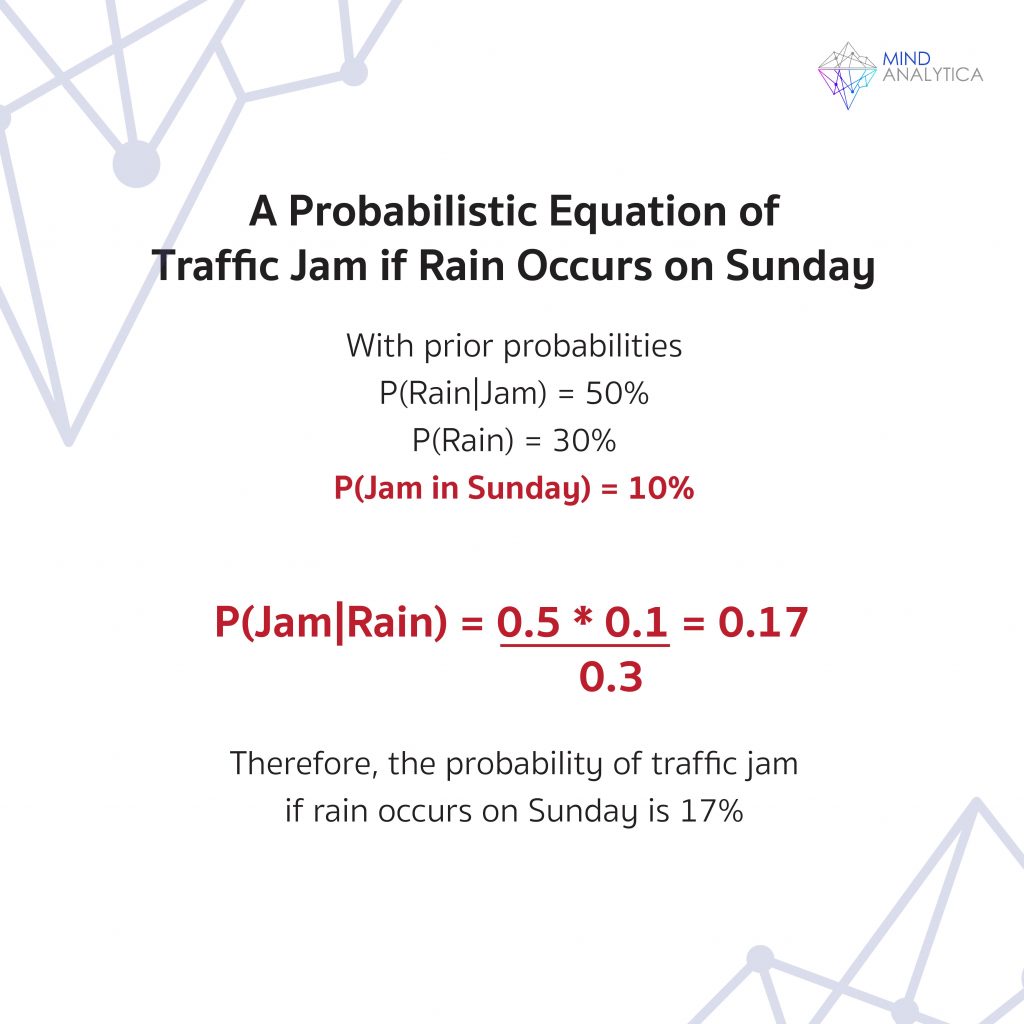

Here's another example: let's assume today is Sunday, and the probability of encountering a traffic jam (regardless of whether it's raining) is 10%. We leave the house, and it happens to be raining. The probability of a traffic jam, given the additional information that it's raining, is as follows:

p(traffic jam | rain) = 0.5 * 0.1 / 0.3 ≈ 0.17

If we don't know whether it will rain, the chance of encountering a traffic jam is 10%. But if we know it's raining, the chance rises to about 17%. Even so, there is still an 83% chance of not encountering a jam.
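The Friday and Sunday calculations can be reproduced with a few lines of Python. This is only a minimal sketch: the function name `bayes_posterior` and its parameter names are made up for illustration, and the consistency check is an addition that is not part of the original walkthrough.

```python
def bayes_posterior(likelihood: float, prior: float, evidence: float) -> float:
    """Return p(H | E) = p(E | H) * p(H) / p(E).

    likelihood = p(E | H), prior = p(H), evidence = p(E).
    """
    for name, value in (("likelihood", likelihood), ("prior", prior), ("evidence", evidence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    if evidence == 0.0:
        raise ValueError("evidence probability must be greater than 0")

    joint = likelihood * prior  # p(E and H)
    # p(E and H) can never be larger than p(E); if it is, the three
    # estimates contradict each other and no valid posterior exists.
    if joint > evidence + 1e-12:
        raise ValueError("inconsistent inputs: p(E and H) cannot exceed p(E)")
    return min(joint / evidence, 1.0)


P_RAIN_GIVEN_JAM = 0.5  # p(rain | traffic jam), from the article
P_RAIN = 0.3            # p(rain), from the article

friday = bayes_posterior(P_RAIN_GIVEN_JAM, prior=0.6, evidence=P_RAIN)
sunday = bayes_posterior(P_RAIN_GIVEN_JAM, prior=0.1, evidence=P_RAIN)

print(f"Friday evening: p(traffic jam | rain) = {friday:.2f}")
print(f"Sunday:         p(traffic jam | rain) = {sunday:.2f}")
```

With the other two numbers fixed at 50% and 30%, a prior above 60% would fail the check, because it would make the combined chance of rain and a jam larger than the chance of rain itself. That is why the Friday example lands exactly on 100%.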

Bayes' theorem has many practical applications, especially in decision-making, such as stock investment decisions or determining whether a patient has a particular illness.

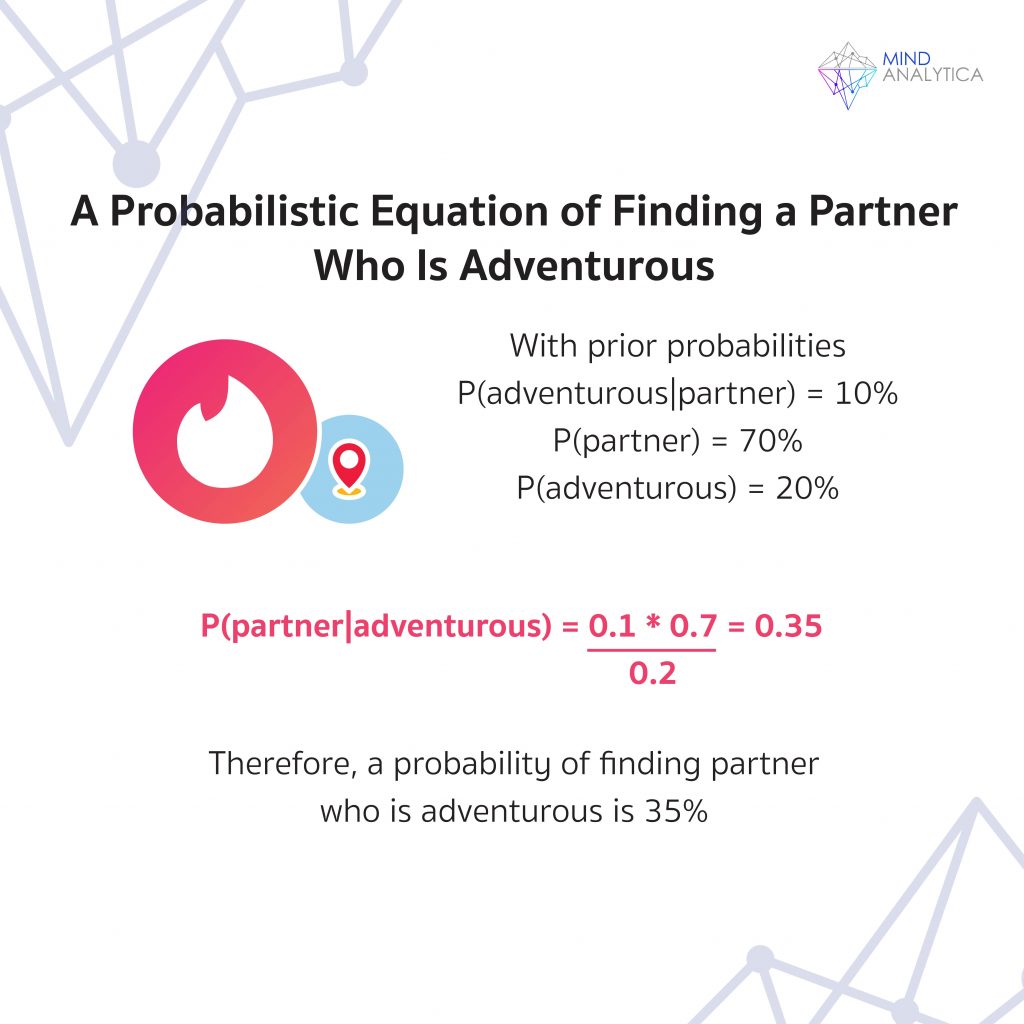

Let's consider another relatable example: suppose we want to know the probability that the partner we choose will stay with us for a long time; let's call it p(soulmate). You might believe that people who can stay with you for a long time rarely enjoy adventurous travel. Call the share of them who do p(enjoys adventure | soulmate), and assume it's 10%. If you meet someone who enjoys adventure, what is the probability that they are a suitable partner, that is, p(soulmate | enjoys adventure)?

To use the formula, you need two more numbers. The first is the probability of meeting someone who enjoys adventurous travel, p(enjoys adventure); let's assume 20% of the people around you do. The second is the prior, p(soulmate): suppose you have met someone, you don't yet know whether they enjoy adventure, and you think there's a 70% chance they are your soulmate. When you discover that they enjoy adventure, what is the probability that this person is your soulmate?

p(soulmate | enjoys adventure) = p(enjoys adventure | soulmate) * p(soulmate) / p(enjoys adventure) = 0.1 * 0.7 / 0.2 = 0.35

With the additional information that they enjoy adventure, the probability that they will be your soulmate is reduced to 35%.
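It can help to check that the three estimates fit together. The sketch below recomputes the 35% and then uses the law of total probability to work out what the numbers imply about people who are not your soulmate. The variable names are illustrative, and the implied value is derived from the three figures above rather than stated in the article.

```python
p_soulmate = 0.7            # prior p(soulmate), from the article
p_adv_given_soulmate = 0.1  # p(enjoys adventure | soulmate), from the article
p_adv = 0.2                 # p(enjoys adventure), from the article

# Bayes' theorem, exactly as in the formula above.
posterior = p_adv_given_soulmate * p_soulmate / p_adv

# Law of total probability:
#   p(adv) = p(adv | soulmate) * p(soulmate)
#          + p(adv | not soulmate) * p(not soulmate)
# Solving for the one unknown term gives the share of adventure lovers
# among non-soulmates that these estimates imply.
p_not_soulmate = 1.0 - p_soulmate
p_adv_given_not_soulmate = (p_adv - p_adv_given_soulmate * p_soulmate) / p_not_soulmate

print(f"p(soulmate | enjoys adventure)     = {posterior:.2f}")
print(f"p(enjoys adventure | not soulmate) = {p_adv_given_not_soulmate:.2f}")
```

Under these assumptions, about 43% of non-soulmates enjoy adventure compared with 10% of soulmates, which is why learning that someone enjoys adventure pulls the estimate down.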

Of course, you can add more information to update the chances of someone being your soulmate, such as p(soulmate | adventurous, has a master's degree) or p(soulmate | adventurous, has a master's degree, likes rock music). Each new piece of information updates the estimate again. When the information consistently points the same way, the result tends to move closer to 0 or 1, which helps you decide whether or not to choose that person as a life partner.
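One way to picture this step-by-step updating is to feed each posterior back in as the next prior. The sketch below does that under two loud assumptions: the traits are treated as independent of each other once you know whether the person is a soulmate, and the probabilities for the master's degree and rock music are invented purely for illustration. Only the adventure figures come from the example above (the 0.13 / 0.30 value is what the article's 10%, 70% and 20% imply for non-soulmates).

```python
def update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """One Bayes update: return p(H | E) from p(H), p(E | H) and p(E | not H)."""
    numerator = p_e_given_h * prior
    # p(E) expanded with the law of total probability.
    evidence = numerator + p_e_given_not_h * (1.0 - prior)
    return numerator / evidence


# (trait, p(trait | soulmate), p(trait | not soulmate))
observations = [
    ("enjoys adventure", 0.10, 0.13 / 0.30),  # implied by the article's numbers
    ("has a master's degree", 0.60, 0.30),    # hypothetical values
    ("likes rock music", 0.50, 0.25),         # hypothetical values
]

belief = 0.7  # prior p(soulmate) from the article
history = [belief]
for trait, p_if_soulmate, p_if_not in observations:
    # Yesterday's posterior becomes today's prior.
    belief = update(belief, p_if_soulmate, p_if_not)
    history.append(belief)
    print(f"after '{trait}': p(soulmate) = {belief:.3f}")
```

The first step reproduces the 35% from the worked example. The two made-up traits then push the estimate back up, which shows that new information can move the probability in either direction depending on what it favors.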

Although the accuracy of decision-making using Bayes' theorem relies on the accuracy of the prior probabilities, the more information you have, the less the result depends on the prior and the more it depends on the data. For example, knowing that someone is adventurous, has a master's degree, and likes rock music reduces the impact of the prior compared with knowing only that they are adventurous.
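A small experiment can illustrate the shrinking role of the prior. In this sketch, two people start with different priors (70% and 30%) and then see the same four observations. The observation probabilities are hypothetical, chosen so that every observation is three times as likely when the hypothesis is true, and the observations are assumed to be independent of each other.

```python
def update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """One Bayes update: return p(H | E) from p(H), p(E | H) and p(E | not H)."""
    numerator = p_e_given_h * prior
    return numerator / (numerator + p_e_given_not_h * (1.0 - prior))


P_E_GIVEN_H = 0.6      # hypothetical p(observation | hypothesis true)
P_E_GIVEN_NOT_H = 0.2  # hypothetical p(observation | hypothesis false)

optimist, skeptic = 0.7, 0.3  # two different starting priors
gaps = [optimist - skeptic]

for step in range(1, 5):
    optimist = update(optimist, P_E_GIVEN_H, P_E_GIVEN_NOT_H)
    skeptic = update(skeptic, P_E_GIVEN_H, P_E_GIVEN_NOT_H)
    gaps.append(optimist - skeptic)
    print(f"step {step}: optimist={optimist:.3f} skeptic={skeptic:.3f} gap={gaps[-1]:.3f}")
```

In this setup the two estimates start 40 percentage points apart and end up within about 2 points of each other. The convergence is this quick only because every observation favors the same conclusion; with weaker or mixed evidence the priors would keep their influence for longer.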

Bayes' theorem closely resembles how people make decisions. When there is little information, we rely on our assumptions (in the form of prior probabilities). When there is more information, the decision is based more on the data than on our assumptions. Try it yourself: if you are going to open a restaurant, what are the chances of success? Then add information such as whether you can cook, whether you have experience managing people, and whether you have a good location that you don't have to rent. The additional information will help you judge more accurately whether your restaurant will succeed or fail.

Making decisions using Bayes' theorem by gradually adding information like this can be a principle for making difficult decisions. Your thinking framework will become more precise, resulting in more accurate decision-making.